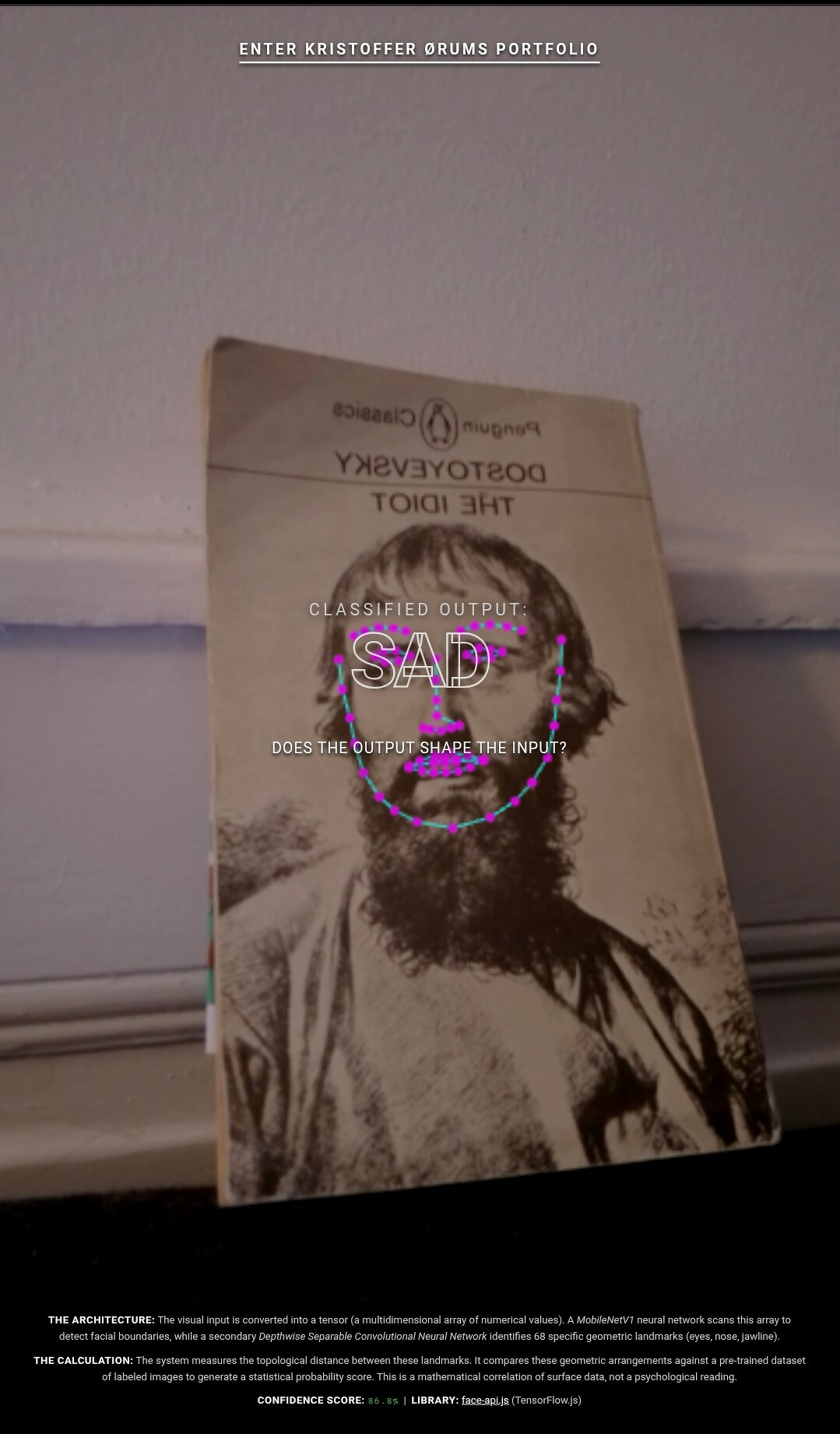

Is your expression a commodity?

My new portfolio landing page is live at www.oerum.org. It is an interactive “Pattern Recognition Interface” that scans your face in real time to classify your emotional state.

Intention: We often anthropomorphize AI, believing it “sees” or “understands” us. This project strips that illusion away. It presents the algorithm for what it is: a statistical machine measuring surface geometry. The project questions the reduction of complex human affect into rigid taxonomies and highlights the friction between data (the map) and feeling (the territory).

The Technology: The interface is built on face-api.js, an open-source library created by Vincent Mühler in 2018 to democratize facial recognition. It runs on top of TensorFlow.js, a machine learning library developed by the Google Brain team that can use the user’s GPU (via WebGL) directly in the browser. The system chains three neural networks:

SSD MobileNet V1: A “Single Shot Detector” built on MobileNet, a network architecture originally designed for mobile devices, used here to locate the bounding box of the face.

FaceLandmark68Net: A model trained on labeled datasets to map 68 specific geometric points (jawline, eyebrows, eyes, nose, mouth) onto the face.

FaceExpressionNet: A classifier using depthwise separable convolutions to calculate the statistical probability that the detected face matches each labeled emotion (e.g., “Happy” or “Sad”).

Privacy: Because everything runs client-side in TensorFlow.js, the surveillance is contained entirely within your own device. No biometric data is sent to the cloud.

Experience the loop: www.oerum.org
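
For the technically curious, here is a minimal sketch of how the three networks described above might be chained with face-api.js. It is not the code running on the site. The `FaceApiLike` interface is a simplified, hypothetical stand-in for the parts of the library the sketch touches, the `/models` path is an assumed location for the weight files, and `topExpression` is a helper invented for this illustration.

```typescript
// Probabilities keyed by expression label, e.g. { happy: 0.91, sad: 0.02 }.
type ExpressionScores = Record<string, number>;

interface Classification {
  label: string;
  probability: number;
}

// Simplified, hypothetical view of the face-api.js surface used below.
// The real library's types are richer; this only names the calls we rely on.
interface FaceApiLike {
  nets: {
    ssdMobilenetv1: { loadFromUri(uri: string): Promise<void> };
    faceLandmark68Net: { loadFromUri(uri: string): Promise<void> };
    faceExpressionNet: { loadFromUri(uri: string): Promise<void> };
  };
  detectSingleFace(input: unknown): {
    withFaceLandmarks(): {
      withFaceExpressions(): PromiseLike<{ expressions: ExpressionScores } | undefined>;
    };
  };
}

// Pure helper: reduce the classifier output to its single most probable label.
// This is exactly the "rigid taxonomy" step: one word replaces a distribution.
function topExpression(scores: ExpressionScores): Classification | null {
  let best: Classification | null = null;
  for (const label of Object.keys(scores)) {
    const probability = scores[label];
    if (best === null || probability > best.probability) {
      best = { label, probability };
    }
  }
  return best;
}

// Load the weights for all three networks once, before the first frame.
async function loadModels(faceapi: FaceApiLike, modelUri: string): Promise<void> {
  await faceapi.nets.ssdMobilenetv1.loadFromUri(modelUri);    // face bounding box
  await faceapi.nets.faceLandmark68Net.loadFromUri(modelUri); // 68 landmark points
  await faceapi.nets.faceExpressionNet.loadFromUri(modelUri); // expression scores
}

// Run detector -> landmarks -> expression classifier on a single frame.
// Returns null when no face is found. Nothing leaves the browser here.
async function classifyFrame(
  faceapi: FaceApiLike,
  frame: unknown, // in a browser this would typically be an HTMLVideoElement
): Promise<Classification | null> {
  const result = await faceapi
    .detectSingleFace(frame)
    .withFaceLandmarks()
    .withFaceExpressions();
  return result ? topExpression(result.expressions) : null;
}
```

In a browser, one would presumably call `loadModels(faceapi, '/models')` once and then `classifyFrame(faceapi, videoElement)` on each animation frame, possibly with a type cast, since the real library's types will not match this simplified interface exactly.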